Real-time mood expression through music while playing

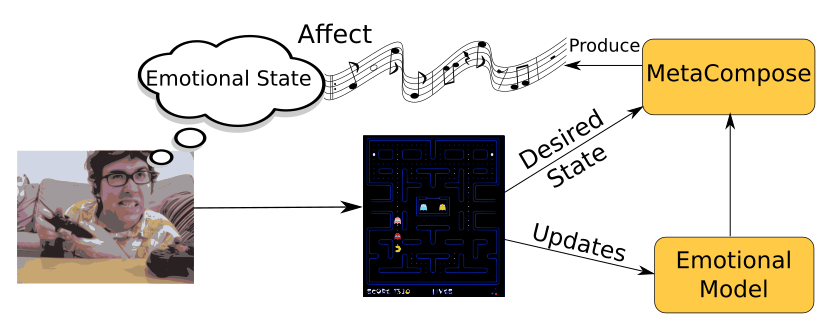

Associate Professor Marco Scirea, SDU Metaverse Lab, has been developing a system called MetaCompose. It is an affective, expressive real-time music generator designed for use in videogames.

What does that mean? It means that it can produce music in real time, entirely from scratch, and charge that music with affective meaning (moods and maybe emotions).

Imagine that you're playing a game. You'd like the music to react to and reflect the actions you take in the game, right? But what about emotion? You'd also like the music to reflect, for example, the mood of the game or the stressfulness of a situation. I believe music is nothing if we strip away its function as a communication tool, and in music we communicate through emotion. If we can express moods through music, we can reflect the player's emotional state, reinforce it, or try to manipulate it. The final goal is to give the player a better experience and the designer or developer a tool for guiding user experience through music.

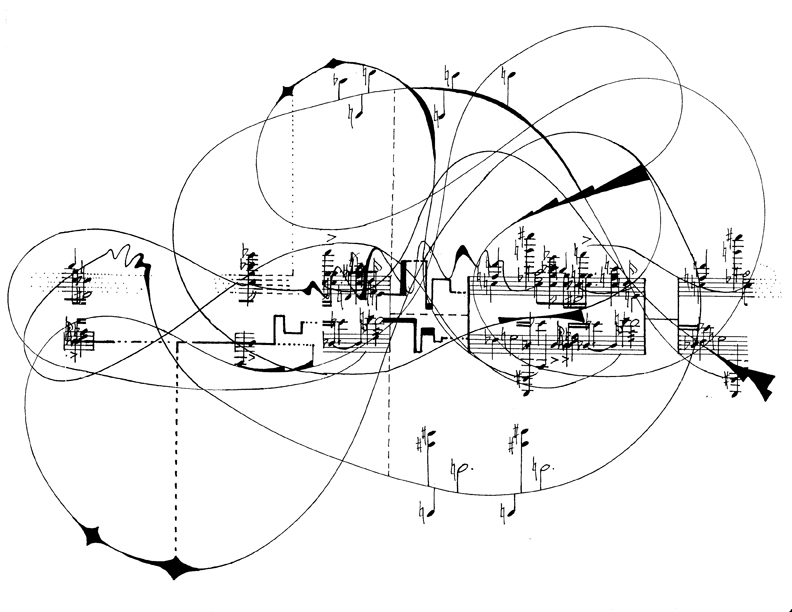

How does it work? This image is from John Cage's book Notations, which aimed to show that conventional music notation is at best an approximation of music, and a very limiting one, since the score is usually treated as the "music" itself.


Because we want MetaCompose to change in real time according to the player's actions, we needed a more flexible representation. We therefore generate abstractions of music, which are then interpreted by "players" to create the music, as in a jam session.

The expression of affective states is separate from the generation of the abstraction: the instruments change the way they play to match a desired mood. This is done according to Scirea's mood expression theory.
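To make this separation concrete, here is a minimal Python sketch. It is not MetaCompose's actual code or Scirea's mood expression theory: the names `Abstraction`, `Mood` and `interpret` are hypothetical, and the mapping used (valence picks a major or minor scale, arousal drives tempo and loudness) is only a common simplification chosen for illustration. The point is that the same abstraction can be played differently depending on the mood requested at that moment.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Semitone offsets of the two scales used by this illustrative player.
MAJOR = [0, 2, 4, 5, 7, 9, 11]
MINOR = [0, 2, 3, 5, 7, 8, 10]


@dataclass(frozen=True)
class Abstraction:
    """Hypothetical score-free melody: (scale degree, length in beats) pairs."""
    melody: List[Tuple[int, float]]


@dataclass(frozen=True)
class Mood:
    """Hypothetical mood coordinates, each expected in [-1, 1]."""
    arousal: float
    valence: float


def interpret(abstraction: Abstraction, mood: Mood, root: int = 60):
    """Play the abstraction as (midi_pitch, seconds, velocity) events.

    Assumed mapping, for illustration only:
    valence >= 0 uses a major scale, otherwise minor;
    arousal scales tempo (60 to 140 BPM) and velocity (50 to 110).
    """
    scale = MAJOR if mood.valence >= 0 else MINOR
    bpm = 100 + 40 * mood.arousal
    velocity = round(80 + 30 * mood.arousal)
    seconds_per_beat = 60.0 / bpm
    events = []
    for degree, beats in abstraction.melody:
        # Degrees past 6 wrap into the next octave.
        pitch = root + scale[degree % 7] + 12 * (degree // 7)
        events.append((pitch, beats * seconds_per_beat, velocity))
    return events


# One abstraction, two interpretations.
theme = Abstraction(melody=[(0, 1.0), (2, 1.0), (4, 2.0)])
calm_sad = interpret(theme, Mood(arousal=-1.0, valence=-1.0))
tense_bright = interpret(theme, Mood(arousal=1.0, valence=1.0))
```

Because the mood is only read when `interpret` is called, a game could pass a new `Mood` for each bar and the "player" would change how it plays without the abstraction being regenerated.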

Find out more in the paper: Marco Scirea, Julian Togelius, Peter Eklund, and Sebastian Risi. "Affective Evolutionary Music Composition with MetaCompose", in Genetic Programming and Evolvable Machines.